Inventories of 14 Dutch Ministries in the Netherlands Algorithm Registry

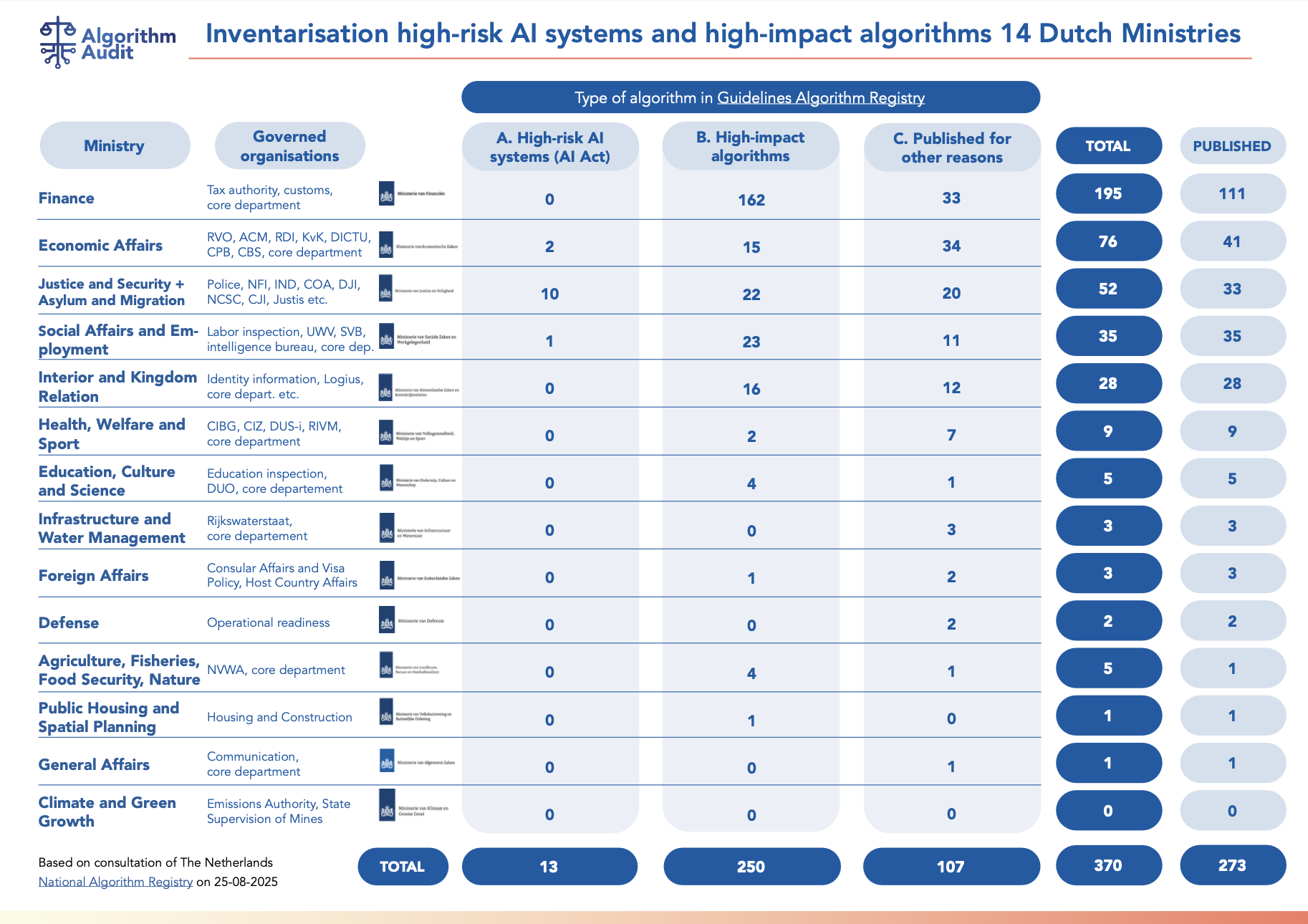

Last summer, 14 Dutch ministries published their inventories of high-risk AI systems and high-impact algorithms in the Dutch National Algorithm Registry. The results are summarized below ⬇️

😮 About 3.5% of the 370 inventoried algorithms are classified as high-risk AI systems. This is far below the European Commission’s estimate that around 15% of algorithmic systems would fall into this category.
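To make the gap concrete, the short sketch below converts both percentages into approximate numbers of systems. It assumes, for illustration only, that the Commission's ~15% estimate can be applied to the same base of 370 inventoried algorithms; the names `TOTAL`, `REPORTED_SHARE`, `EC_ESTIMATE_SHARE` and `implied_count` are hypothetical and not taken from the inventories or the registry.

```python
# Back-of-the-envelope comparison. Assumption (illustrative only): both
# shares are applied to the same base of 370 inventoried algorithms.
TOTAL = 370
REPORTED_SHARE = 0.035    # about 3.5% classified as high-risk AI systems
EC_ESTIMATE_SHARE = 0.15  # Commission estimate: around 15%


def implied_count(total: int, share: float) -> float:
    """Return how many systems a given share of `total` corresponds to."""
    return total * share


reported = implied_count(TOTAL, REPORTED_SHARE)
expected = implied_count(TOTAL, EC_ESTIMATE_SHARE)

print(f"Reported as high-risk: ~{reported:.0f} systems")
print(f"At the 15% estimate:   ~{expected:.0f} systems")
print(f"Difference:            ~{expected - reported:.0f} systems")
```

Under that assumption, roughly 13 systems were classified as high-risk, against roughly 55 to 56 that a 15% share would imply.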

❓ It remains unclear whether the inventories were conducted in a structured and consistent way. This raises concerns about the correctness and completeness of both the identification and the risk classification of AI systems.

For instance:

- Monocam of the Dutch Police: published as a ‘high-impact algorithm’ instead of a ‘high-risk AI system’. Under Annex III, point 6(d), of the AI Act it should qualify as high-risk, since the system calculates the likelihood of an offence (phone use while driving).

- The letter from the Ministry of Economic Affairs states that no algorithms were inventoried at ICTU, while 1 algorithm is listed in the registry.

- 273 out of 370 systems of Dutch national institutions (about 74%) are now publicly listed in the Algorithm Registry, a significant step towards more transparency.

- The Ministry of Defence identified zero AI systems. This highlights the absence of democratic oversight for AI deployed in security and warfare contexts.

More algorithms under governance = greater need for consistent AI oversight 🧮 That’s why Algorithm Audit offers a free, open-source tool to identify and classify algorithmic systems, already used by Dutch public sector bodies: https://algorithmaudit.eu/technical-tools/implementation-tool/#tool